infrastructure.aws

TCO (Total Cost of Ownership) Calculator

Pricing Calculator

The AWS CLI is already installed on Amazon Linux 2.

Install Python 3:

```shell
sudo yum install -y python3-pip python3 python3-setuptools
```

Install Boto3:

```shell
pip3 install boto3 --user
```

On macOS, install Python 3 using Homebrew. First, install Homebrew:

```shell
/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"
```

Install Python 3 with `brew install python`.

Insert the Homebrew Python directory at the front of your PATH environment variable:

```shell
export PATH="/usr/local/opt/python/libexec/bin:$PATH"
```

Verify you are using Python 3 with `python --version`.

Install the AWS CLI and Boto3:

```shell
pip install awscli boto3 --upgrade --user
```

Install Docker on Amazon Linux 2:

```shell
sudo amazon-linux-extras install docker
```

Obtain your AWS access key ID and secret access key from the AWS Management Console, then run `aws configure` and enter them when prompted.

This creates `~/.aws/credentials`, a text file that the AWS CLI and Boto3 read by default for your credentials.

The file should look like this:

```ini
[default]
aws_access_key_id = AKIAIOSFODNN7EXAMPLE
aws_secret_access_key = wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY
```
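The credentials file is INI-style, with one `[profile]` section per set of keys. As a minimal sketch of how it can be read, Python's standard `configparser` parses the example above; `read_profile` is a hypothetical helper, not part of the AWS CLI or Boto3 (which ship their own loaders).

```python
import configparser


def read_profile(text, profile="default"):
    """Return the two key fields of one profile (hypothetical helper)."""
    # interpolation=None so characters such as '%' in secrets are taken literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    # A missing profile raises KeyError, like plain dict access.
    section = parser[profile]
    return {
        "aws_access_key_id": section["aws_access_key_id"],
        "aws_secret_access_key": section["aws_secret_access_key"],
    }


SAMPLE = """\
[default]
aws_access_key_id = AKIAIOSFODNN7EXAMPLE
aws_secret_access_key = wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY
"""

creds = read_profile(SAMPLE)
print(creds["aws_access_key_id"])  # AKIAIOSFODNN7EXAMPLE
```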

Verify your credentials with the AWS CLI:

```shell
aws sts get-caller-identity
```

The output should look like this:

```json
{
    "UserId": "AIDAJKLMNOPQRSTUVWXYZ",
    "Account": "123456789012",
    "Arn": "arn:aws:iam::123456789012:user/devuser"
}
```
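The `Arn` value follows the general ARN layout `arn:partition:service:region:account-id:resource`; IAM ARNs leave the region empty. A small sketch that splits an ARN into those parts; `parse_arn` is a hypothetical helper written for illustration.

```python
def parse_arn(arn):
    """Split an ARN into its six colon-separated components."""
    # maxsplit=5 keeps any colons inside the resource part intact.
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not an ARN: {arn!r}")
    _, partition, service, region, account, resource = parts
    return {
        "partition": partition,
        "service": service,
        "region": region,
        "account": account,
        "resource": resource,
    }


info = parse_arn("arn:aws:iam::123456789012:user/devuser")
print(info["account"], info["resource"])  # 123456789012 user/devuser
```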

```shell
aws configure
aws sts get-caller-identity
```

Upload a folder to S3:

```shell
aws s3 cp <path-to-source-folder> s3://<path-to-target-folder> --recursive --exclude ".DS_Store"
```

Create a table:

```shell
aws dynamodb create-table \
    --table-name Music \
    --key-schema AttributeName=Artist,KeyType=HASH \
                 AttributeName=SongTitle,KeyType=RANGE \
    --attribute-definitions \
        AttributeName=Artist,AttributeType=S \
        AttributeName=SongTitle,AttributeType=S \
    --provisioned-throughput \
        ReadCapacityUnits=5,WriteCapacityUnits=5
```

Describe the table:

```shell
aws dynamodb describe-table --table-name Music
```

Put an item:

```shell
aws dynamodb put-item \
    --table-name Music \
    --item '{
        "Artist": {"S": "Dream Theater"},
        "AlbumTitle": {"S": "Images and Words"},
        "SongTitle": {"S": "Under a Glass Moon"} }'
```
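The `--item` argument uses DynamoDB's typed JSON, where every value is wrapped in a type descriptor such as `S` (string), `N` (number, sent as a string), `BOOL`, `NULL`, `L` (list) or `M` (map). A minimal sketch of converting plain Python values into that shape; `to_attribute_value` and `to_item` are hypothetical helpers (Boto3 has its own `TypeSerializer`), and sets and binary values are deliberately left out.

```python
import json
from decimal import Decimal


def to_attribute_value(value):
    """Wrap a Python value in a DynamoDB type descriptor (no sets/binary)."""
    if value is None:
        return {"NULL": True}
    # bool must be checked before int, because bool is a subclass of int.
    if isinstance(value, bool):
        return {"BOOL": value}
    if isinstance(value, str):
        return {"S": value}
    if isinstance(value, (int, Decimal)):
        # Numbers travel as strings so no precision is lost.
        return {"N": str(value)}
    if isinstance(value, list):
        return {"L": [to_attribute_value(v) for v in value]}
    if isinstance(value, dict):
        return {"M": {k: to_attribute_value(v) for k, v in value.items()}}
    # Plain floats end up here; use Decimal instead.
    raise TypeError(f"unsupported type: {type(value).__name__}")


def to_item(attributes):
    """Convert a dict of attributes into the --item JSON shape."""
    return {k: to_attribute_value(v) for k, v in attributes.items()}


item = to_item({
    "Artist": "Dream Theater",
    "AlbumTitle": "Images and Words",
    "SongTitle": "Under a Glass Moon",
})
print(json.dumps(item))
```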

Scan the table:

```shell
aws dynamodb scan --table-name Music
```

This section follows https://github.com/linuxacademy/content-dynamodb-deepdive

```python
import boto3

s3 = boto3.resource('s3')

# Print the name of every bucket in the account.
for bucket in s3.buckets.all():
    print(bucket.name)
```

```python
import boto3

ec2 = boto3.client('ec2')

# Launch a single t2.micro instance into the given subnet.
response = ec2.run_instances(
    ImageId='ami-0947d2ba12ee1ff75',
    InstanceType='t2.micro',
    KeyName='xyshell',
    MinCount=1,
    MaxCount=1,
    SubnetId='subnet-05b78e3323bcabddc'
)

print(response['Instances'][0]['InstanceId'])
```

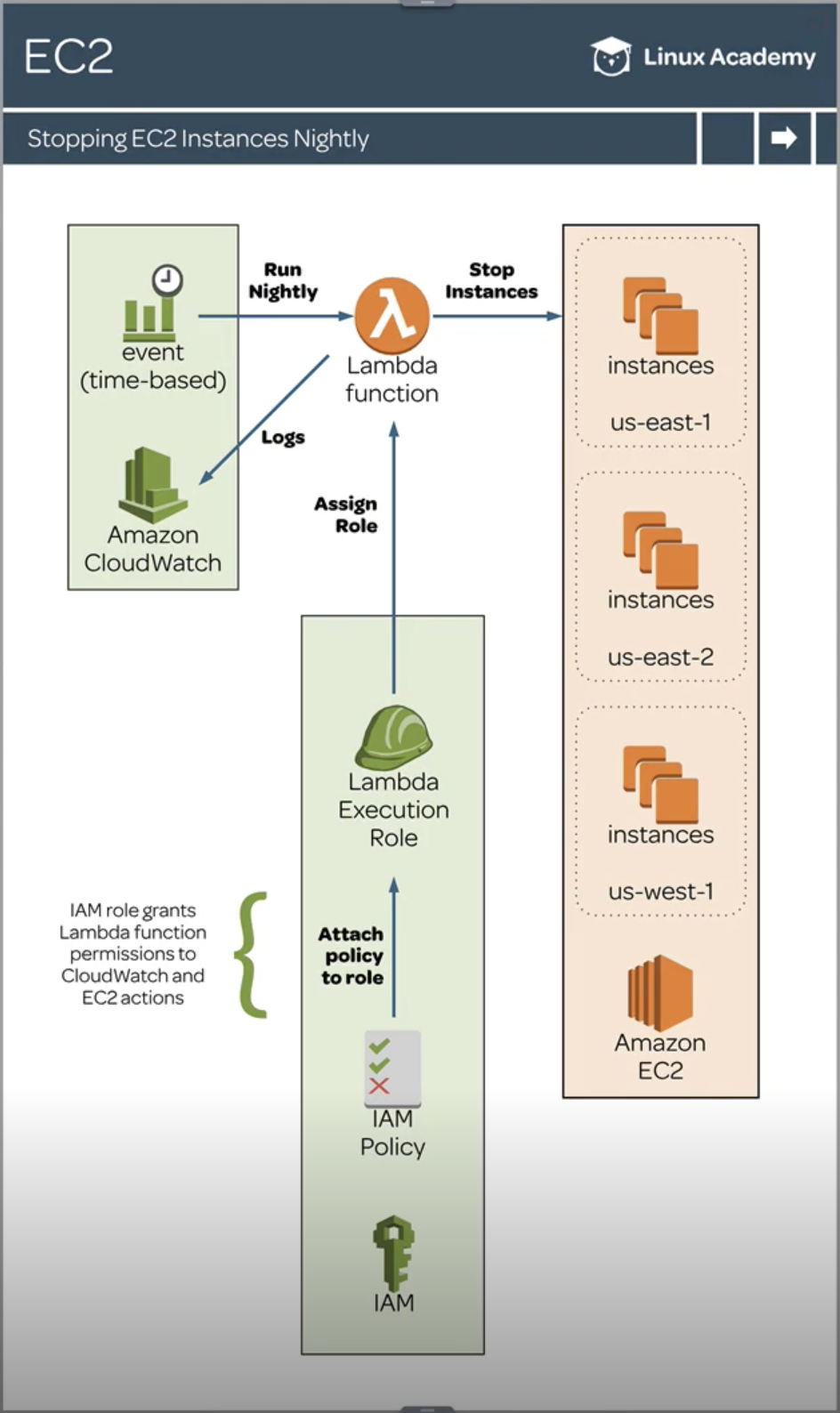

```python
import boto3


def lambda_handler(event, context):
    # Get the list of all regions available to the account.
    ec2_client = boto3.client('ec2')
    regions = [region['RegionName']
               for region in ec2_client.describe_regions()['Regions']]

    for region in regions:
        ec2 = boto3.resource('ec2', region_name=region)
        print('Region:', region)

        # Only instances that are currently running.
        instances = ec2.instances.filter(
            Filters=[
                {'Name': 'instance-state-name', 'Values': ['running']}
            ]
        )

        for instance in instances:
            instance.stop()
            print('Stopped instance:', instance.id)
```

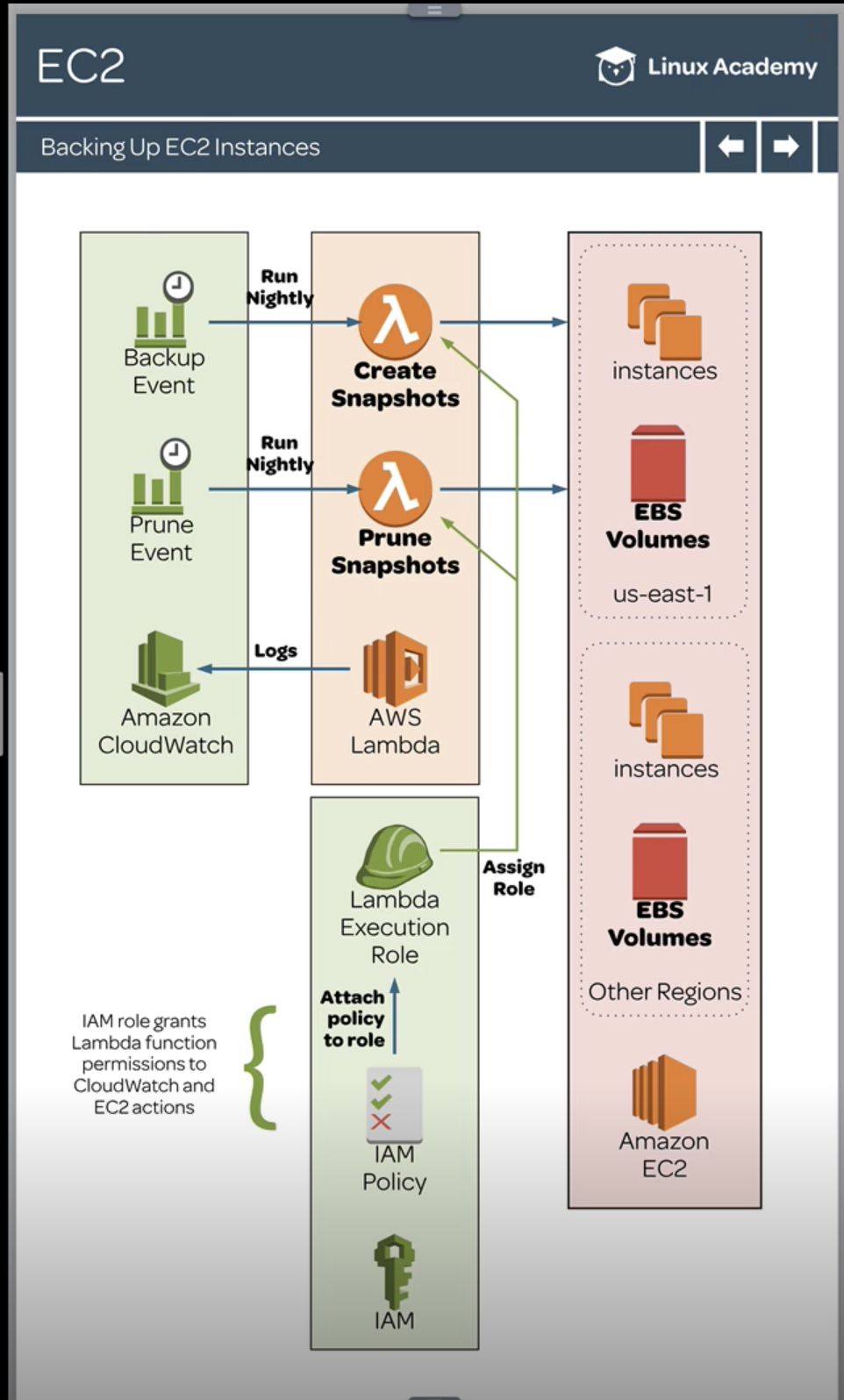

```python
from datetime import datetime

import boto3


def lambda_handler(event, context):
    ec2_client = boto3.client('ec2')
    regions = [region['RegionName']
               for region in ec2_client.describe_regions()['Regions']]

    for region in regions:
        print('Instances in EC2 Region {0}:'.format(region))
        ec2 = boto3.resource('ec2', region_name=region)

        # Only instances tagged backup=true.
        instances = ec2.instances.filter(
            Filters=[
                {'Name': 'tag:backup', 'Values': ['true']}
            ]
        )

        # UTC timestamp without microseconds, e.g. 2019-02-12T21:32:21
        timestamp = datetime.utcnow().replace(microsecond=0).isoformat()

        # Snapshot every EBS volume attached to each tagged instance.
        for i in instances.all():
            for v in i.volumes.all():
                desc = 'Backup of {0}, volume {1}, created {2}'.format(
                    i.id, v.id, timestamp)
                print(desc)

                snapshot = v.create_snapshot(Description=desc)
                print("Created snapshot:", snapshot.id)
```

```python
import boto3
from botocore.exceptions import ClientError


def lambda_handler(event, context):
    account_id = boto3.client('sts').get_caller_identity().get('Account')
    ec2 = boto3.client('ec2')
    regions = [region['RegionName']
               for region in ec2.describe_regions()['Regions']]

    for region in regions:
        print("Region:", region)
        ec2 = boto3.client('ec2', region_name=region)
        response = ec2.describe_snapshots(OwnerIds=[account_id])

        snapshots = response["Snapshots"]

        # Sort oldest first, then drop the 3 newest from the delete list.
        snapshots.sort(key=lambda x: x["StartTime"])
        snapshots = snapshots[:-3]

        for snapshot in snapshots:
            snapshot_id = snapshot['SnapshotId']
            try:
                print("Deleting snapshot:", snapshot_id)
                ec2.delete_snapshot(SnapshotId=snapshot_id)
            except ClientError:
                # e.g. the snapshot is still used by a registered AMI
                print("Snapshot {} in use, skipping.".format(snapshot_id))
                continue
```
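The pruning logic above sorts snapshots oldest first and deletes everything except the last three, so it keeps the three newest snapshots per region (across all volumes, not per volume). A sketch of that selection as a pure function, which can be checked without AWS; `snapshots_to_delete` and its `keep` parameter are hypothetical.

```python
from datetime import datetime, timezone


def snapshots_to_delete(snapshots, keep=3):
    """Return the snapshots to delete, keeping the `keep` newest by StartTime."""
    # sorted() leaves the caller's list untouched.
    ordered = sorted(snapshots, key=lambda s: s["StartTime"])
    # ordered[:-0] would be empty, so keep == 0 is handled explicitly.
    return ordered[:-keep] if keep else ordered


def ts(day):
    return datetime(2019, 2, day, tzinfo=timezone.utc)


snaps = [{"SnapshotId": f"snap-{d}", "StartTime": ts(d)} for d in (5, 1, 4, 2, 3)]
doomed = [s["SnapshotId"] for s in snapshots_to_delete(snaps)]
print(doomed)  # ['snap-1', 'snap-2']
```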

```python
import boto3


def lambda_handler(event, context):
    ec2_client = boto3.client('ec2')
    regions = [region['RegionName']
               for region in ec2_client.describe_regions()['Regions']]

    for region in regions:
        ec2 = boto3.resource('ec2', region_name=region)
        print("Region:", region)

        # "available" means the volume is not attached to any instance.
        volumes = ec2.volumes.filter(
            Filters=[{'Name': 'status', 'Values': ['available']}])

        for volume in volumes:
            v = ec2.Volume(volume.id)
            print("Deleting EBS volume: {}, Size: {} GiB".format(v.id, v.size))
            v.delete()
```

```python
import datetime

import boto3
from dateutil.parser import parse


def days_old(date):
    # CreationDate is in UTC (e.g. 2019-02-12T21:32:21.000Z), so compare
    # it with the current UTC time rather than local time.
    parsed = parse(date).replace(tzinfo=None)
    diff = datetime.datetime.utcnow() - parsed
    return diff.days


def lambda_handler(event, context):
    ec2_client = boto3.client('ec2')
    regions = [region['RegionName']
               for region in ec2_client.describe_regions()['Regions']]

    for region in regions:
        ec2 = boto3.client('ec2', region_name=region)
        print("Region:", region)

        # Only AMIs owned by this account.
        amis = ec2.describe_images(Owners=['self'])['Images']

        for ami in amis:
            creation_date = ami['CreationDate']
            age_days = days_old(creation_date)
            image_id = ami['ImageId']
            print('ImageId: {}, CreationDate: {} ({} days old)'.format(
                image_id, creation_date, age_days))

            if age_days >= 2:
                print('Deleting ImageId:', image_id)
                ec2.deregister_image(ImageId=image_id)
```
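`CreationDate` is an ISO 8601 UTC timestamp such as `2019-02-12T21:32:21.000Z`. As a sketch, the same age can be computed with only the standard library by keeping both datetimes timezone-aware; this variant drops the `dateutil` dependency, and its optional `now` argument is an assumption added for testability.

```python
from datetime import datetime, timezone


def days_old(date, now=None):
    """Whole days elapsed since an ISO 8601 UTC timestamp like '2019-02-12T21:32:21.000Z'."""
    # datetime.fromisoformat() only accepts a trailing 'Z' from Python 3.11,
    # so normalize it to an explicit +00:00 offset first.
    created = datetime.fromisoformat(date.replace("Z", "+00:00"))
    now = now or datetime.now(timezone.utc)
    return (now - created).days


print(days_old("2019-02-12T21:32:21.000Z",
               now=datetime(2019, 2, 15, 21, 32, 21, tzinfo=timezone.utc)))  # 3
```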

This chapter follows https://github.com/linuxacademy/content-lambda-boto3

```python
import boto3

# Talk to DynamoDB Local instead of the AWS endpoint.
client = boto3.client('dynamodb', endpoint_url='http://localhost:8000')
print(client.list_tables())
```

```python
import boto3

dynamodb = boto3.resource('dynamodb')

table = dynamodb.create_table(
    TableName='Movies',
    KeySchema=[
        {
            'AttributeName': 'year',
            'KeyType': 'HASH'  # Partition key
        },
        {
            'AttributeName': 'title',
            'KeyType': 'RANGE'  # Sort key
        }
    ],
    AttributeDefinitions=[
        {
            'AttributeName': 'year',
            'AttributeType': 'N'
        },
        {
            'AttributeName': 'title',
            'AttributeType': 'S'
        },
    ],
    ProvisionedThroughput={
        'ReadCapacityUnits': 5,
        'WriteCapacityUnits': 5
    }
)

print('Table status:', table.table_status)
print('Waiting for', table.name, 'to complete creating...')
table.meta.client.get_waiter('table_exists').wait(TableName='Movies')
print('Table status:', dynamodb.Table('Movies').table_status)
```

```python
import decimal
import json

import boto3

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('Movies')

with open("moviedata.json") as json_file:
    # DynamoDB does not accept Python floats, so parse them as Decimal.
    movies = json.load(json_file, parse_float=decimal.Decimal)
    for movie in movies:
        year = int(movie['year'])
        title = movie['title']
        info = movie['info']

        print("Adding movie:", year, title)

        table.put_item(
            Item={
                'year': year,
                'title': title,
                'info': info,
            }
        )
```

moviedata.json:

```json
[
    {
        "year": 2013,
        "title": "Rush",
        "info": {
            "directors": ["Ron Howard"],
            "release_date": "2013-09-02T00:00:00Z",
            "rating": 8.3,
            "genres": ["Action", "Biography", "Drama", "Sport"],
            "image_url": "http://ia.media-imdb.com/images/M/MV5BMTQyMDE0MTY0OV5BMl5BanBnXkFtZTcwMjI2OTI0OQ@@._V1_SX400_.jpg",
            "plot": "A re-creation of the merciless 1970s rivalry between Formula One rivals James Hunt and Niki Lauda.",
            "rank": 2,
            "running_time_secs": 7380,
            "actors": ["Daniel Bruhl", "Chris Hemsworth", "Olivia Wilde"]
        }
    }
]
```

```python
import decimal
import json

import boto3

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('Movies')


class DecimalEncoder(json.JSONEncoder):
    '''Helper class to convert a DynamoDB item to JSON'''

    def default(self, o):
        if isinstance(o, decimal.Decimal):
            # Whole numbers become int, everything else float.
            if abs(o) % 1 > 0:
                return float(o)
            else:
                return int(o)
        return super(DecimalEncoder, self).default(o)


title = "The Big New Movie"
year = 2015

response = table.put_item(
    Item={
        'year': year,
        'title': title,
        'info': {
            'plot': "Nothing happens at all.",
            'rating': decimal.Decimal(0)
        }
    }
)

print("PutItem succeeded:")
print(json.dumps(response, indent=4, cls=DecimalEncoder))
```
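`parse_float=decimal.Decimal` and `DecimalEncoder` work as a pair: the first keeps floats (which DynamoDB's Boto3 resource layer rejects) out of items on the way in, and the second turns the `Decimal` values Boto3 returns back into JSON numbers on the way out. A self-contained sketch of that round trip with no AWS calls; the encoder mirrors the one above and the sample JSON is a trimmed, hypothetical version of `moviedata.json`.

```python
import decimal
import json


class DecimalEncoder(json.JSONEncoder):
    """Same rule as the encoder above: whole Decimals -> int, others -> float."""

    def default(self, o):
        if isinstance(o, decimal.Decimal):
            return float(o) if abs(o) % 1 > 0 else int(o)
        return super().default(o)


raw = '{"year": 2013, "title": "Rush", "info": {"rating": 8.3, "rank": 2}}'

# On the way in: JSON floats become Decimal, JSON integers stay int.
movie = json.loads(raw, parse_float=decimal.Decimal)

# On the way out: Decimals become plain JSON numbers again.
round_trip = json.dumps(movie, cls=DecimalEncoder)
print(round_trip)  # {"year": 2013, "title": "Rush", "info": {"rating": 8.3, "rank": 2}}
```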

```python
import json

from botocore.exceptions import ClientError

# Assumes `table` and DecimalEncoder from the examples above.
title = "The Big New Movie"
year = 2015

try:
    response = table.get_item(
        Key={
            'year': year,
            'title': title
        }
    )
except ClientError as e:
    print(e.response['Error']['Message'])
else:
    item = response['Item']
    print("GetItem succeeded:")
    print(json.dumps(item, indent=4, cls=DecimalEncoder))
```

```python
# Assumes `table`, `decimal`, `json` and DecimalEncoder from the examples above.
title = "The Big New Movie"
year = 2015

# Set rating, plot and actors inside the nested "info" map.
response = table.update_item(
    Key={
        'year': year,
        'title': title
    },
    UpdateExpression="set info.rating = :r, info.plot=:p, info.actors=:a",
    ExpressionAttributeValues={
        ':r': decimal.Decimal('5.5'),
        ':p': "Everything happens all at once.",
        ':a': ["Larry", "Moe", "Curly"]
    },
    ReturnValues="UPDATED_NEW"
)

print("UpdateItem succeeded:")
print(json.dumps(response, indent=4, cls=DecimalEncoder))
```

```python
# Assumes `table`, `decimal`, `json` and DecimalEncoder from the examples above.
title = "The Big New Movie"
year = 2015

# Increment info.rating by 1 on the server side.
response = table.update_item(
    Key={
        'year': year,
        'title': title
    },
    UpdateExpression="set info.rating = info.rating + :val",
    ExpressionAttributeValues={
        ':val': decimal.Decimal(1)
    },
    ReturnValues="UPDATED_NEW"
)

print("UpdateItem succeeded:")
print(json.dumps(response, indent=4, cls=DecimalEncoder))
```

```python
# Assumes `table`, `json`, ClientError and DecimalEncoder from the examples above.
title = "The Big New Movie"
year = 2015

# Remove the first actor, but only if the movie has at least 3 actors.
print("Attempting conditional update...")

try:
    response = table.update_item(
        Key={
            'year': year,
            'title': title
        },
        UpdateExpression="remove info.actors[0]",
        ConditionExpression="size(info.actors) >= :num",
        ExpressionAttributeValues={
            ':num': 3
        },
        ReturnValues="UPDATED_NEW"
    )
except ClientError as e:
    if e.response['Error']['Code'] == "ConditionalCheckFailedException":
        print(e.response['Error']['Message'])
    else:
        raise
else:
    print("UpdateItem succeeded:")
    print(json.dumps(response, indent=4, cls=DecimalEncoder))
```

```python
# Assumes `table`, `json`, ClientError and DecimalEncoder from the examples above.
title = "The Big New Movie"
year = 2015

print("Attempting a conditional delete...")

try:
    # No ConditionExpression is passed, so this delete is unconditional;
    # the ConditionalCheckFailedException branch only applies if one is added.
    response = table.delete_item(
        Key={
            'year': year,
            'title': title
        }
    )
except ClientError as e:
    if e.response['Error']['Code'] == "ConditionalCheckFailedException":
        print(e.response['Error']['Message'])
    else:
        raise
else:
    print("DeleteItem succeeded:")
    print(json.dumps(response, indent=4, cls=DecimalEncoder))
```

```python
from boto3.dynamodb.conditions import Key

# Assumes `table` from the examples above.
print("Movies from 1985")

# Query on the partition key only.
response = table.query(
    KeyConditionExpression=Key('year').eq(1985)
)

for i in response['Items']:
    print(i['year'], ":", i['title'])
```

```python
# Assumes `table`, `json`, Key and DecimalEncoder from the examples above.
print("Movies from 1992 - titles A-L, with genres and lead actor")

# "year" is a DynamoDB reserved word, so it is aliased as #yr.
response = table.query(
    ProjectionExpression="#yr, title, info.genres, info.actors[0]",
    ExpressionAttributeNames={"#yr": "year"},
    KeyConditionExpression=Key('year').eq(1992) & Key('title').between('A', 'L')
)

for i in response['Items']:
    print(json.dumps(i, cls=DecimalEncoder))
```

```python
# Assumes `table`, `json`, Key and DecimalEncoder from the examples above.
fe = Key('year').between(1950, 1959)
pe = "#yr, title, info.rating"
# Alias for the reserved word "year" used in the projection expression.
ean = {"#yr": "year", }

response = table.scan(
    FilterExpression=fe,
    ProjectionExpression=pe,
    ExpressionAttributeNames=ean
)

for i in response['Items']:
    print(json.dumps(i, cls=DecimalEncoder))

# A scan returns at most 1 MB per call; keep reading while DynamoDB
# returns a LastEvaluatedKey.
while 'LastEvaluatedKey' in response:
    response = table.scan(
        ProjectionExpression=pe,
        FilterExpression=fe,
        ExpressionAttributeNames=ean,
        ExclusiveStartKey=response['LastEvaluatedKey']
    )

    for i in response['Items']:
        print(json.dumps(i, cls=DecimalEncoder))
```
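The `while 'LastEvaluatedKey' in response` loop is the usual way to page through `scan` (and `query`) results. A sketch of the same loop as a reusable generator; `paginate` is a hypothetical helper, and `fake_scan` is a made-up stand-in for `table.scan` so the example runs without AWS. With a real table it would be called as `paginate(table.scan, FilterExpression=fe, ...)`.

```python
def paginate(call, **kwargs):
    """Yield every item from a DynamoDB-style paginated call such as table.scan."""
    while True:
        response = call(**kwargs)
        yield from response.get("Items", [])
        last_key = response.get("LastEvaluatedKey")
        if last_key is None:
            break
        # Resume right after the last key of the previous page.
        kwargs["ExclusiveStartKey"] = last_key


# Hypothetical stand-in for table.scan: 5 items served 2 per page.
DATA = [{"year": 1950 + i} for i in range(5)]


def fake_scan(ExclusiveStartKey=None, **_):
    start = 0 if ExclusiveStartKey is None else ExclusiveStartKey["i"] + 1
    response = {"Items": DATA[start:start + 2]}
    if start + 2 < len(DATA):
        response["LastEvaluatedKey"] = {"i": start + 1}
    return response


print([item["year"] for item in paginate(fake_scan)])
# [1950, 1951, 1952, 1953, 1954]
```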

```python
import boto3

dynamodb = boto3.resource('dynamodb')

table = dynamodb.Table('Movies')

table.delete()
```

This chapter follows https://github.com/linuxacademy/content-lambda-boto3

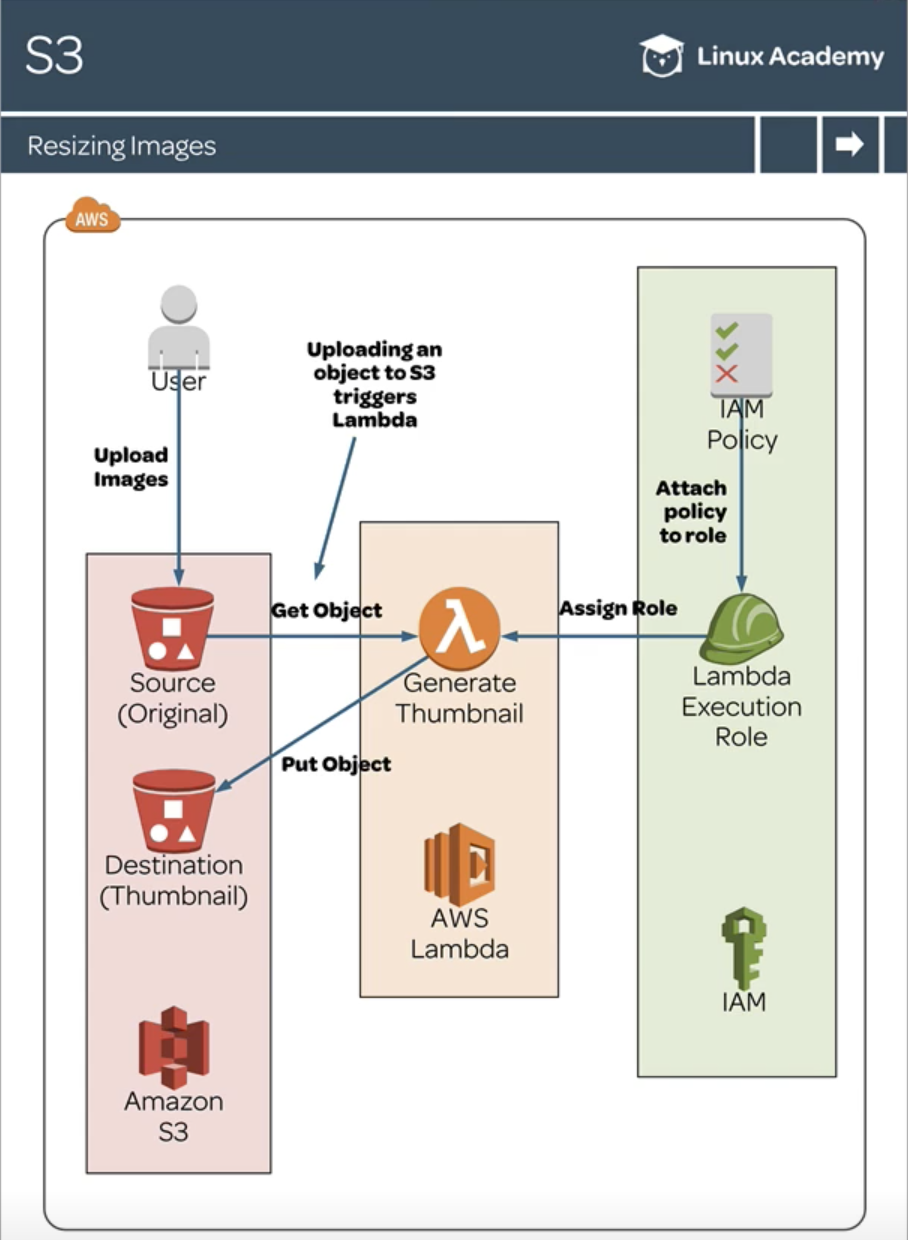

```python
import os
import tempfile
from urllib.parse import unquote_plus

import boto3
from PIL import Image

s3 = boto3.client('s3')
DEST_BUCKET = os.environ['DEST_BUCKET']
SIZE = 128, 128


def lambda_handler(event, context):
    for record in event['Records']:
        source_bucket = record['s3']['bucket']['name']
        # Object keys in S3 event notifications are URL-encoded.
        key = unquote_plus(record['s3']['object']['key'])
        thumb = 'thumb-' + key
        with tempfile.TemporaryDirectory() as tmpdir:
            # Use only the file name locally so keys containing "/" still work.
            filename = os.path.basename(key)
            download_path = os.path.join(tmpdir, filename)
            upload_path = os.path.join(tmpdir, 'thumb-' + filename)
            s3.download_file(source_bucket, key, download_path)
            generate_thumbnail(download_path, upload_path)
            s3.upload_file(upload_path, DEST_BUCKET, thumb)

        print('Thumbnail image saved at {}/{}'.format(DEST_BUCKET, thumb))


def generate_thumbnail(source_path, dest_path):
    print('Generating thumbnail from:', source_path)
    with Image.open(source_path) as image:
        # thumbnail() resizes in place and preserves the aspect ratio.
        image.thumbnail(SIZE)
        image.save(dest_path)
```
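Object keys in S3 event notifications arrive URL-encoded, so a key such as `my photo.jpg` is delivered as `my+photo.jpg` and must be decoded before it is passed to `download_file`. A sketch of extracting bucket/key pairs from an event; `object_locations` is a hypothetical helper, and the sample event is trimmed to the fields used here.

```python
from urllib.parse import unquote_plus


def object_locations(event):
    """Return (bucket, key) pairs from an S3 event, with keys URL-decoded."""
    return [
        (record["s3"]["bucket"]["name"],
         unquote_plus(record["s3"]["object"]["key"]))
        for record in event["Records"]
    ]


sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "source-bucket"},
                "object": {"key": "photos/my+photo%281%29.jpg"}}}
    ]
}

print(object_locations(sample_event))
# [('source-bucket', 'photos/my photo(1).jpg')]
```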

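To package Pillow, download the wheel that matches the Lambda runtime from https://pypi.org/project/Pillow/ (the commands below use the CPython 3.7 manylinux x86_64 wheel of Pillow 5.4.1), then:

```shell
# A wheel is a zip archive; unpack it
unzip Pillow-5.4.1-cp37-cp37m-manylinux1_x86_64.whl
# Remove the package metadata directory
rm -rf Pillow-5.4.1.dist-info
# Bundle the handler and the PIL package into a deployment package
zip -r9 lambda.zip lambda_function.py PIL
```

Upload the zip file to AWS Lambda.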

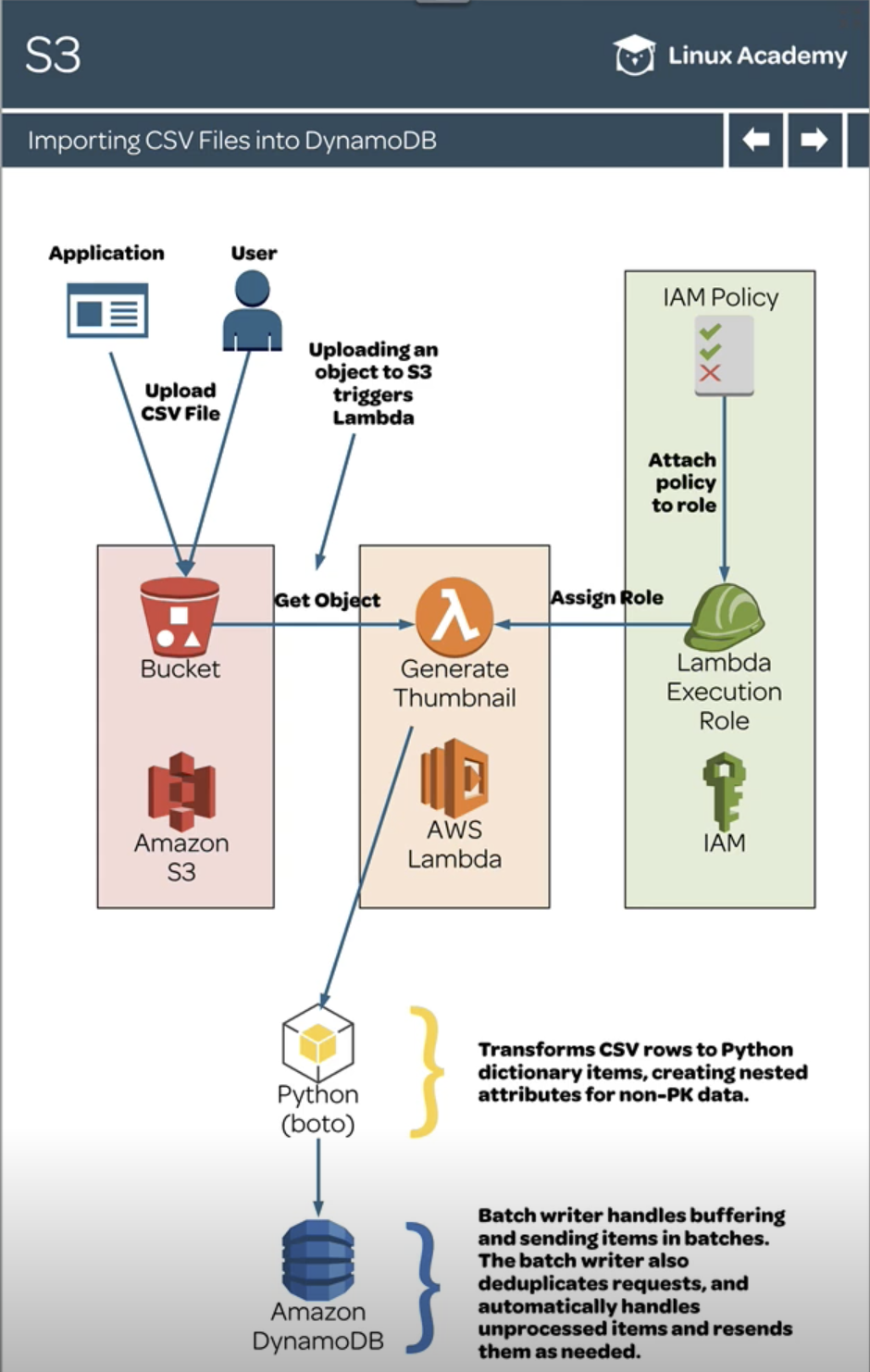

```python
import csv
import os
import tempfile
from urllib.parse import unquote_plus

import boto3

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('Movies')
s3 = boto3.client('s3')


def lambda_handler(event, context):
    for record in event['Records']:
        source_bucket = record['s3']['bucket']['name']
        # Object keys in S3 event notifications are URL-encoded.
        key = unquote_plus(record['s3']['object']['key'])
        with tempfile.TemporaryDirectory() as tmpdir:
            download_path = os.path.join(tmpdir, os.path.basename(key))
            s3.download_file(source_bucket, key, download_path)
            items = read_csv(download_path)

            # batch_writer buffers the puts and sends them in batches.
            with table.batch_writer() as batch:
                for item in items:
                    batch.put_item(Item=item)


def read_csv(file):
    items = []
    with open(file) as csvfile:
        reader = csv.DictReader(csvfile)
        for row in reader:
            data = {}
            data['Meta'] = {}
            data['Year'] = int(row['Year'])
            data['Title'] = row['Title'] or None
            data['Meta']['Length'] = int(row['Length'] or 0)
            data['Meta']['Subject'] = row['Subject'] or None
            data['Meta']['Actor'] = row['Actor'] or None
            data['Meta']['Actress'] = row['Actress'] or None
            data['Meta']['Director'] = row['Director'] or None
            data['Meta']['Popularity'] = row['Popularity'] or None
            data['Meta']['Awards'] = row['Awards'] == 'Yes'
            data['Meta']['Image'] = row['Image'] or None
            # Drop empty attributes instead of storing nulls.
            data['Meta'] = {k: v for k, v in data['Meta'].items()
                            if v is not None}
            items.append(data)
    return items
```
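`table.batch_writer()` hides the batching details: it buffers `put_item` calls, sends them as `BatchWriteItem` requests (at most 25 items per request), and resends unprocessed items. As an illustration only, not Boto3's actual implementation, this is how a list of items splits into request-sized chunks; `chunks` is a hypothetical helper.

```python
from itertools import islice


def chunks(items, size=25):
    """Yield successive lists of at most `size` items (25 = BatchWriteItem limit)."""
    it = iter(items)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


rows = [{"Year": 2000 + i, "Title": f"Movie {i}"} for i in range(60)]
print([len(batch) for batch in chunks(rows)])  # [25, 25, 10]
```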

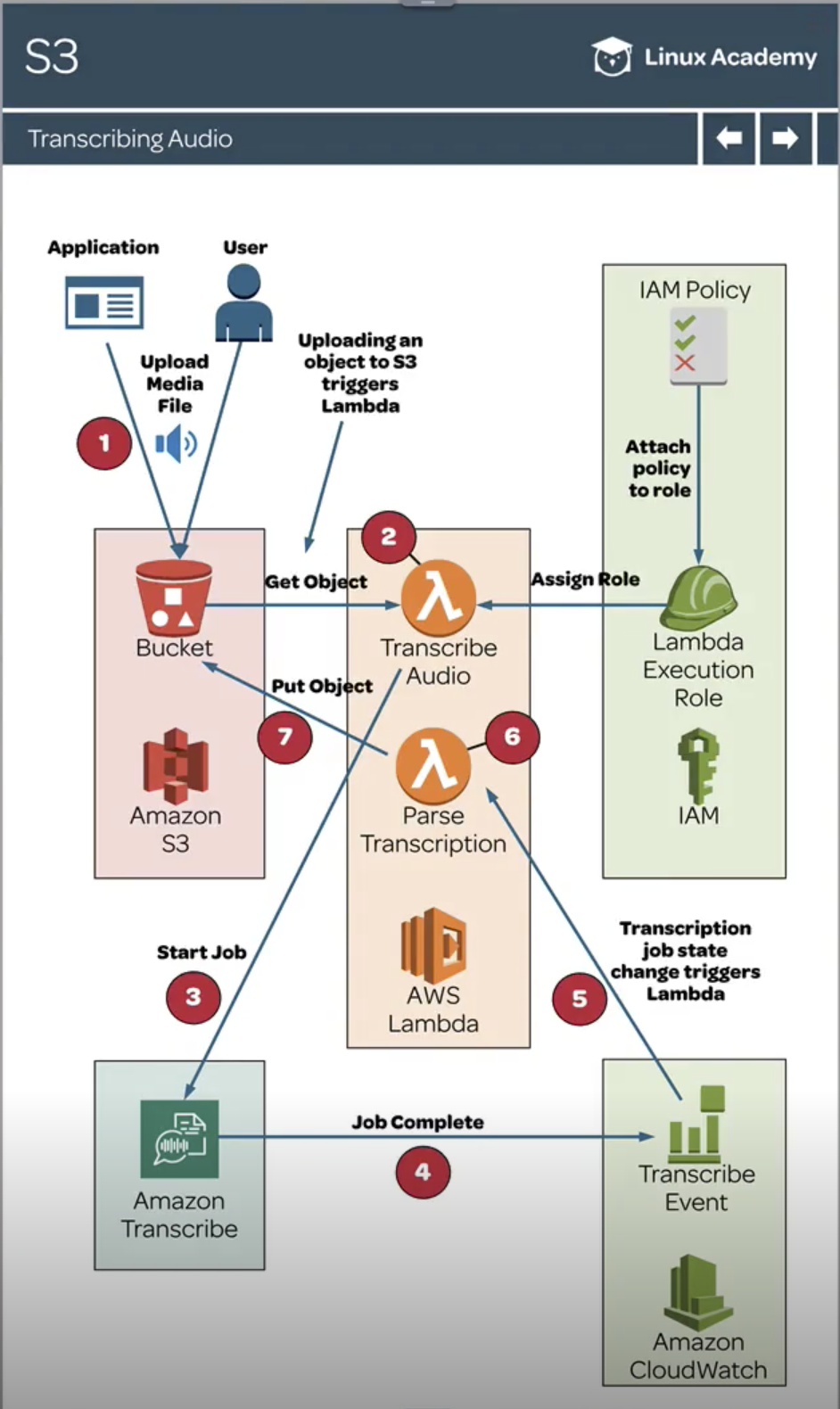

TranscribeAudio:

```python
import boto3

s3 = boto3.client('s3')
transcribe = boto3.client('transcribe')


def lambda_handler(event, context):
    for record in event['Records']:
        source_bucket = record['s3']['bucket']['name']
        key = record['s3']['object']['key']
        object_url = "https://s3.amazonaws.com/{0}/{1}".format(
            source_bucket, key)
        # Job names must be unique within the account, so this fixed
        # name only works for the first upload (later ones raise ConflictException).
        response = transcribe.start_transcription_job(
            TranscriptionJobName='MyTranscriptionJob',
            Media={'MediaFileUri': object_url},
            MediaFormat='mp3',
            LanguageCode='en-US'
        )
        print(response)
```
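Because `TranscriptionJobName` must be unique within the account and may only contain letters, digits, `.`, `_` and `-` (up to 200 characters), a fixed name only works once. A sketch of deriving a valid, unique name from the object key; `make_job_name` is hypothetical, and the random `uuid4` suffix is one possible choice of uniqueness token.

```python
import re
import uuid


def make_job_name(key, suffix=None):
    """Build a Transcribe job name from an S3 key plus a unique suffix."""
    # The suffix is assumed to contain only allowed characters already.
    suffix = suffix or uuid.uuid4().hex
    # Replace every character outside [0-9a-zA-Z._-] (e.g. '/' or ' ') with '-'.
    base = re.sub(r"[^0-9a-zA-Z._-]", "-", key)
    # Keep the whole name within 200 characters, trimming the key part.
    base = base[:200 - len(suffix) - 1]
    return f"{base}-{suffix}"


print(make_job_name("audio/my talk.mp3", suffix="abc123"))
# audio-my-talk.mp3-abc123
```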

ParseTranscription:

```python
import json
import os
import urllib.request

import boto3

BUCKET_NAME = os.environ['BUCKET_NAME']

s3 = boto3.resource('s3')
transcribe = boto3.client('transcribe')


def lambda_handler(event, context):
    # The event's "detail" carries the name of the finished job.
    job_name = event['detail']['TranscriptionJobName']
    job = transcribe.get_transcription_job(TranscriptionJobName=job_name)
    uri = job['TranscriptionJob']['Transcript']['TranscriptFileUri']
    print(uri)

    # Download the transcript JSON from the URI returned by Transcribe.
    content = urllib.request.urlopen(uri).read().decode('UTF-8')
    print(json.dumps(content))

    data = json.loads(content)
    text = data['results']['transcripts'][0]['transcript']

    # Save the plain-text transcript to S3.
    obj = s3.Object(BUCKET_NAME, job_name + '-asrOutput.txt')
    obj.put(Body=text)
```

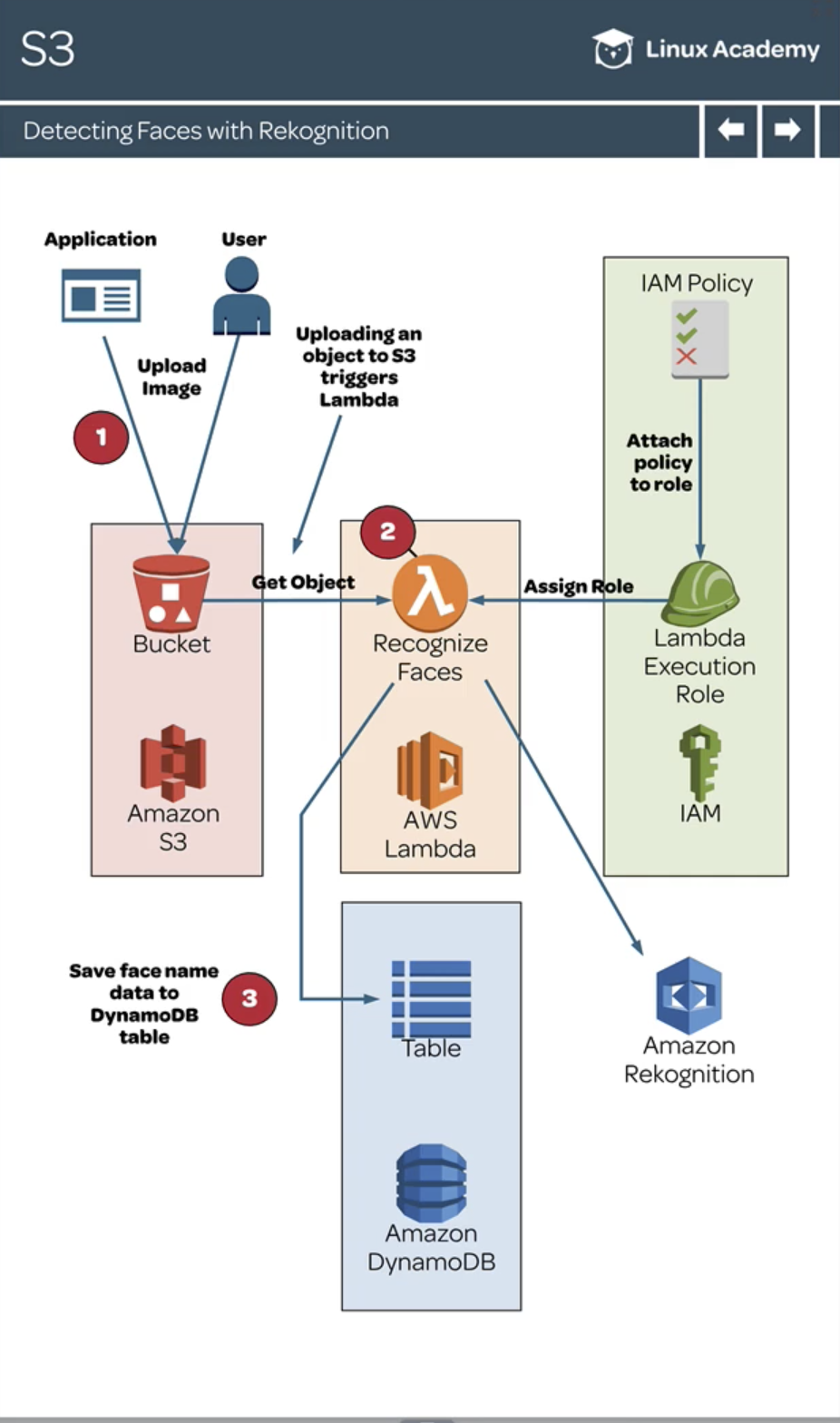

```python
import os

import boto3

TABLE_NAME = os.environ['TABLE_NAME']

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table(TABLE_NAME)

s3 = boto3.resource('s3')
rekognition = boto3.client('rekognition')


def lambda_handler(event, context):
    # Read the uploaded image from S3.
    bucket = event['Records'][0]['s3']['bucket']['name']
    key = event['Records'][0]['s3']['object']['key']
    obj = s3.Object(bucket, key)
    image = obj.get()['Body'].read()

    print('Recognizing celebrities...')
    response = rekognition.recognize_celebrities(Image={'Bytes': image})

    names = []

    for celebrity in response['CelebrityFaces']:
        name = celebrity['Name']
        print('Name: ' + name)
        names.append(name)

    print(names)

    # Store the recognized names under the object key.
    print('Saving face data to DynamoDB table:', TABLE_NAME)
    response = table.put_item(
        Item={
            'key': key,
            'names': names,
        }
    )
    print(response)
```
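Each entry in `CelebrityFaces` also carries a `MatchConfidence` score (0 to 100). A sketch of the name-collecting loop as a pure function with an optional confidence threshold; `celebrity_names`, the threshold parameter and the sample response (with made-up names) are assumptions for illustration.

```python
def celebrity_names(response, min_confidence=0.0):
    """Return names from a recognize_celebrities response at or above a confidence."""
    return [
        face["Name"]
        for face in response.get("CelebrityFaces", [])
        if face.get("MatchConfidence", 0.0) >= min_confidence
    ]


sample_response = {
    "CelebrityFaces": [
        {"Name": "Jane Example", "MatchConfidence": 99.1},
        {"Name": "John Sample", "MatchConfidence": 62.0},
    ],
    "UnrecognizedFaces": [],
}

print(celebrity_names(sample_response, min_confidence=90))  # ['Jane Example']
```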

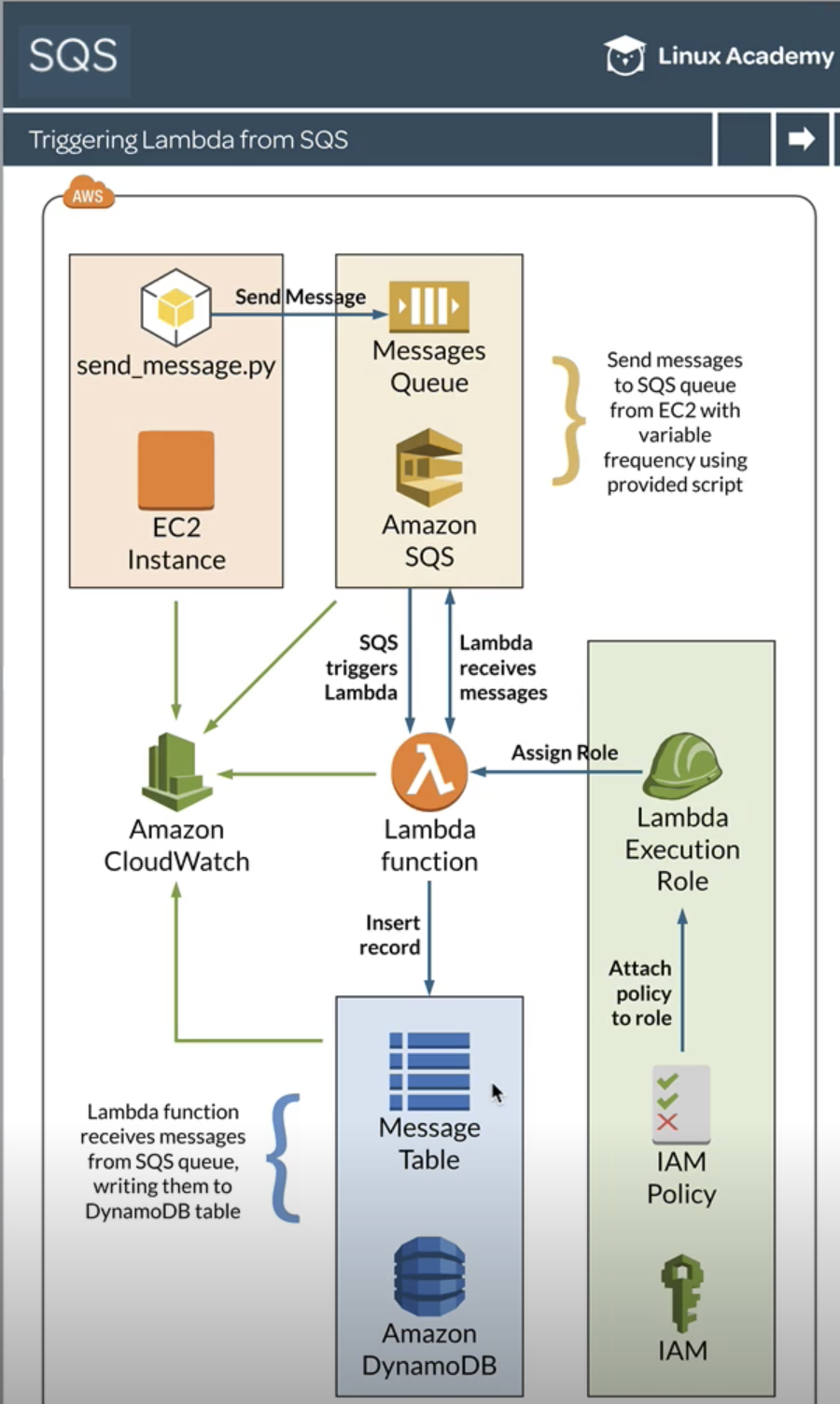

```python
import json
import os
from datetime import datetime

import boto3

QUEUE_NAME = os.environ['QUEUE_NAME']
MAX_QUEUE_MESSAGES = os.environ['MAX_QUEUE_MESSAGES']
DYNAMODB_TABLE = os.environ['DYNAMODB_TABLE']

sqs = boto3.resource('sqs')
dynamodb = boto3.resource('dynamodb')


def lambda_handler(event, context):

    # Receive messages from the SQS queue.
    queue = sqs.get_queue_by_name(QueueName=QUEUE_NAME)

    print("ApproximateNumberOfMessages:",
          queue.attributes.get('ApproximateNumberOfMessages'))

    for message in queue.receive_messages(
            MaxNumberOfMessages=int(MAX_QUEUE_MESSAGES)):

        print(message)

        # Write the message to DynamoDB.
        table = dynamodb.Table(DYNAMODB_TABLE)

        response = table.put_item(
            Item={
                'MessageId': message.message_id,
                'Body': message.body,
                'Timestamp': datetime.now().isoformat()
            }
        )
        print("Wrote message to DynamoDB:", json.dumps(response))

        # Delete the message only after it has been stored.
        message.delete()
        print("Deleted message:", message.message_id)
```
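`receive_messages` only accepts `MaxNumberOfMessages` values from 1 to 10, so an out-of-range or non-numeric `MAX_QUEUE_MESSAGES` setting makes the handler fail. A small sketch that validates the environment value before use; `max_messages` is hypothetical, and clamping rather than raising is a design choice.

```python
def max_messages(value, default=1):
    """Parse MAX_QUEUE_MESSAGES and clamp it to SQS's allowed range of 1-10."""
    try:
        n = int(value)
    except (TypeError, ValueError):
        # Missing or non-numeric setting: fall back to the default.
        n = default
    return max(1, min(10, n))


print([max_messages(v) for v in ("5", "25", "0", None, "abc")])  # [5, 10, 1, 1, 1]
```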

send_message.py:

```python
import argparse
import logging
import sys
from time import sleep

import boto3
from faker import Faker

parser = argparse.ArgumentParser()
parser.add_argument("--queue-name", "-q", required=True,
                    help="SQS queue name")
parser.add_argument("--interval", "-i", required=True,
                    help="timer interval", type=float)
parser.add_argument("--message", "-m", help="message to send")
parser.add_argument("--log", "-l", default="INFO",
                    help="logging level")
args = parser.parse_args()

if args.log:
    logging.basicConfig(
        format='[%(levelname)s] %(message)s', level=args.log)

else:
    parser.print_help(sys.stderr)

sqs = boto3.client('sqs')

response = sqs.get_queue_url(QueueName=args.queue_name)

queue_url = response['QueueUrl']

logging.info(queue_url)

# Send a message every `interval` seconds until interrupted,
# using random Faker text when --message is not given.
while True:
    message = args.message
    if not args.message:
        fake = Faker()
        message = fake.text()

    logging.info('Sending message: ' + message)

    response = sqs.send_message(
        QueueUrl=queue_url, MessageBody=message)

    logging.info('MessageId: ' + response['MessageId'])

    sleep(args.interval)
```

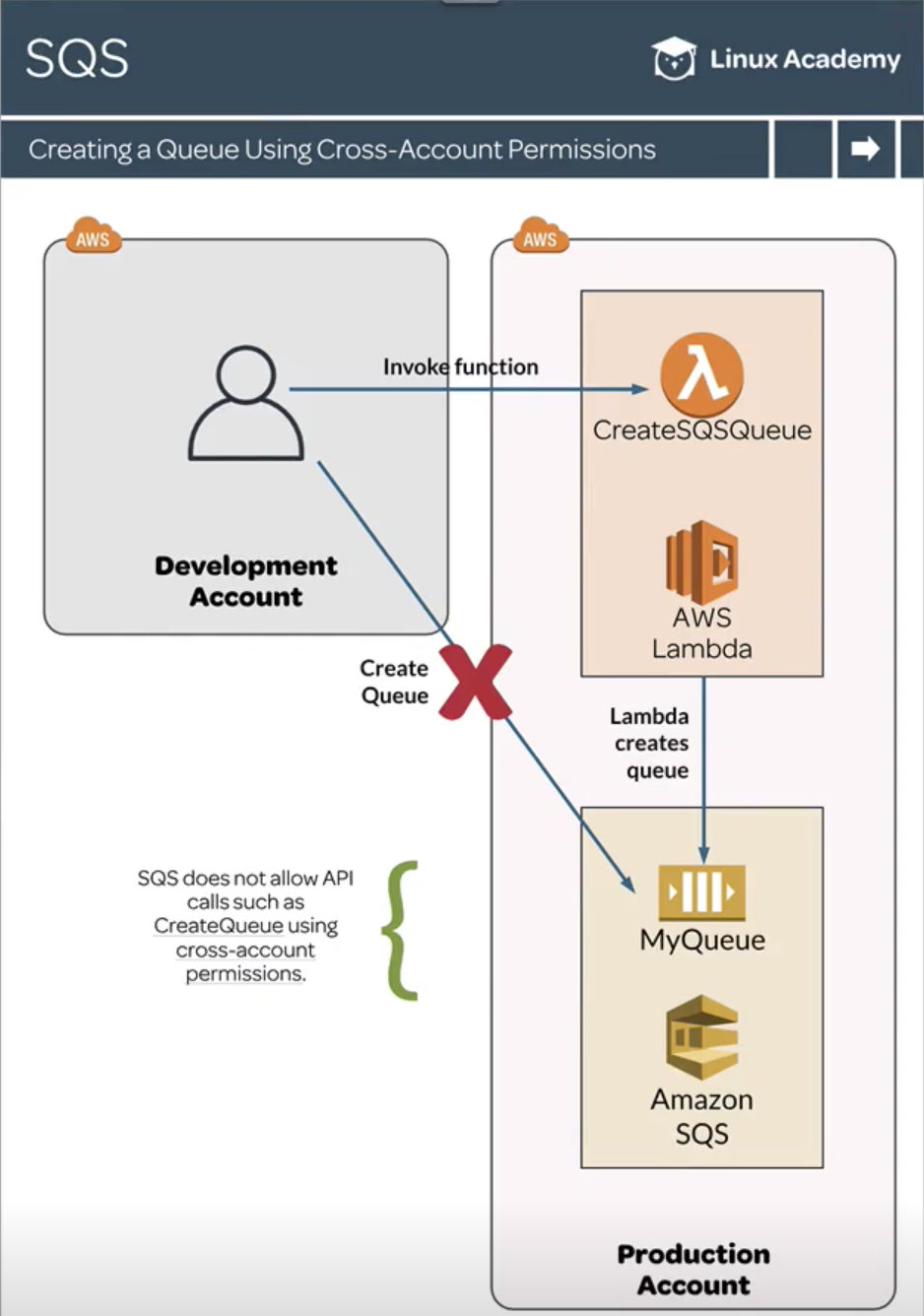

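SQS does not allow some API calls, such as CreateQueue, to be made through cross-account permissions. A workaround is to create a Lambda function in the account that should own the queues (here, production) that calls the API, and invoke that function from the other account (development).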

Create AWS CLI Profiles

```shell
# Development account admin:
aws configure --profile devadmin

# Production account admin:
aws configure --profile prodadmin
```

Create a Lambda Function in the Production Account

Use the code in lambda_function.py, and give the function an execution role with the permissions in lambda_execution_role.json.

Assign Permissions to the Lambda Function

```shell
aws lambda add-permission \
  --function-name CreateSQSQueue \
  --statement-id DevAccountAccess \
  --action 'lambda:InvokeFunction' \
  --principal 'arn:aws:iam::__DEVELOPMENT_ACCOUNT_NUMBER__:user/devadmin' \
  --region us-east-2 \
  --profile prodadmin
```

To view the policy:

```shell
aws lambda get-policy \
  --function-name CreateSQSQueue \
  --region us-east-2 \
  --profile prodadmin
```

To remove the permission:

```shell
aws lambda remove-permission \
  --function-name CreateSQSQueue \
  --statement-id DevAccountAccess \
  --region us-east-2 \
  --profile prodadmin
```

Invoke the Production Lambda Function from the Development Account:

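```shell
# With AWS CLI v2, also pass --cli-binary-format raw-in-base64-out
# so the JSON payload is not interpreted as base64.
aws lambda invoke \
  --function-name '__LAMBDA_FUNCTION_ARN__' \
  --payload '{"QueueName": "MyQueue" }' \
  --invocation-type RequestResponse \
  --profile devadmin \
  --region us-east-2 \
  output.txt
```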

lambda_function.py:

```python
import boto3

sqs = boto3.resource('sqs')


def lambda_handler(event, context):
    # Create the queue named in the invocation payload.
    queue = sqs.create_queue(QueueName=event['QueueName'])
    print('Queue URL', queue.url)
```

lambda_execution_role.json:

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "logs:CreateLogGroup",
                "logs:CreateLogStream",
                "logs:PutLogEvents"
            ],
            "Resource": "arn:aws:logs:*:*:*"
        },
        {
            "Action": [
                "sqs:CreateQueue"
            ],
            "Effect": "Allow",
            "Resource": "*"
        }
    ]
}
```

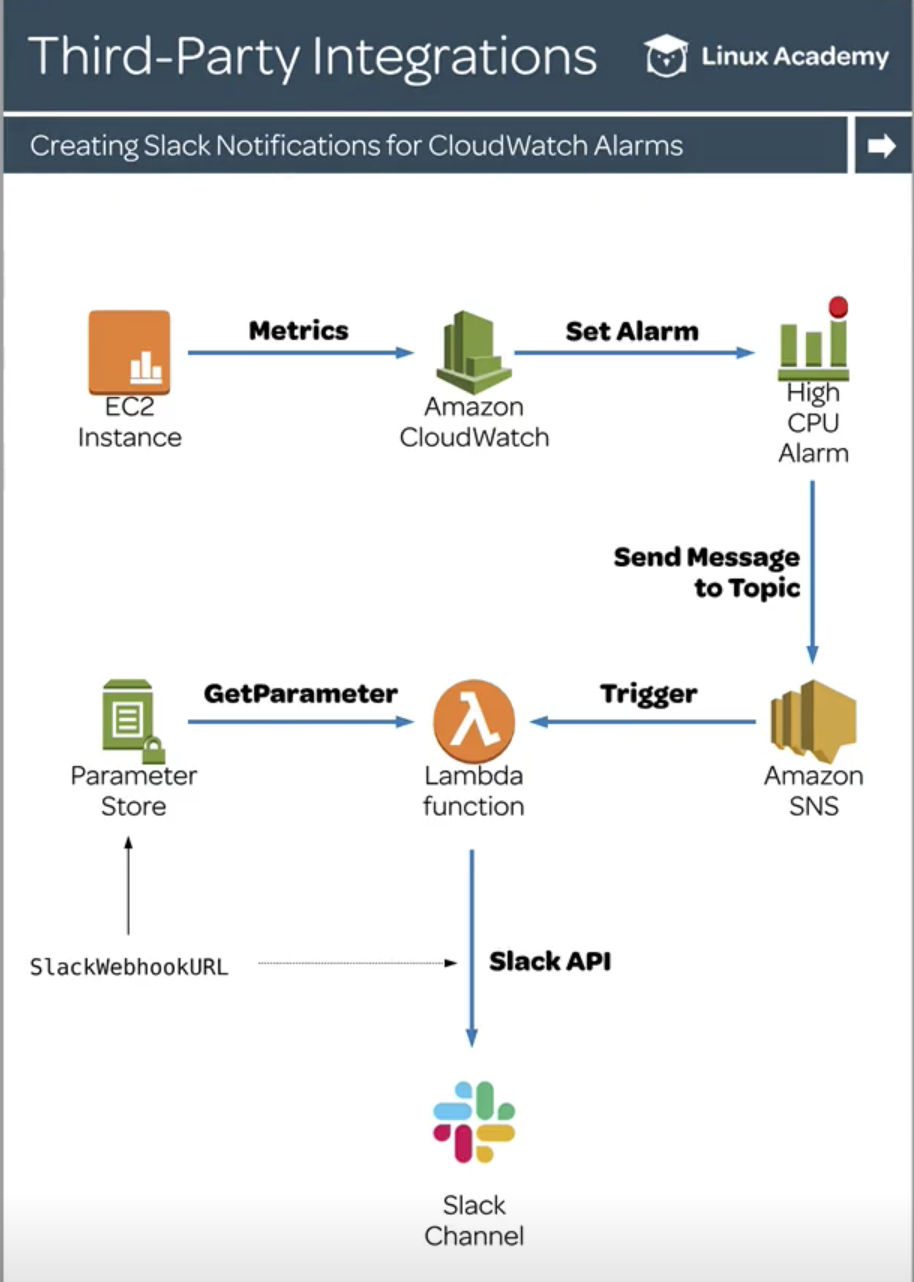

```python
import json
from urllib.error import HTTPError, URLError
from urllib.request import Request, urlopen

import boto3

ssm = boto3.client('ssm')


def lambda_handler(event, context):
    print(json.dumps(event))

    # The CloudWatch alarm is a JSON string inside the SNS record.
    message = json.loads(event['Records'][0]['Sns']['Message'])
    print(json.dumps(message))

    alarm_name = message['AlarmName']
    new_state = message['NewStateValue']
    reason = message['NewStateReason']

    slack_message = {
        'text': f':fire: {alarm_name} state is now {new_state}: {reason}\n'
                f'```\n{message}```'
    }

    # WithDecryption=True decrypts the parameter if it is a SecureString.
    webhook_url = ssm.get_parameter(
        Name='SlackWebHookURL', WithDecryption=True)

    req = Request(webhook_url['Parameter']['Value'],
                  json.dumps(slack_message).encode('utf-8'))

    try:
        response = urlopen(req)
        response.read()
        print("Message posted to Slack")
    except HTTPError as e:
        print(f'Request failed: {e.code} {e.reason}')
    except URLError as e:
        print(f'Server connection failed: {e.reason}')
```
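The parsing step of the handler can be pulled out into a pure function and tested with a hand-written SNS event. A sketch; `build_slack_message` is hypothetical, it omits the raw-message code block for brevity, and the sample alarm contains only the fields the handler reads.

```python
import json


def build_slack_message(event):
    """Turn an SNS-wrapped CloudWatch alarm event into a Slack webhook payload."""
    # The alarm itself is a JSON string inside the SNS record.
    message = json.loads(event["Records"][0]["Sns"]["Message"])
    return {
        "text": f":fire: {message['AlarmName']} state is now "
                f"{message['NewStateValue']}: {message['NewStateReason']}"
    }


alarm = {
    "AlarmName": "HighCPU",
    "NewStateValue": "ALARM",
    "NewStateReason": "Threshold Crossed",
}
event = {"Records": [{"Sns": {"Message": json.dumps(alarm)}}]}

payload = build_slack_message(event)
print(payload["text"])  # :fire: HighCPU state is now ALARM: Threshold Crossed
```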

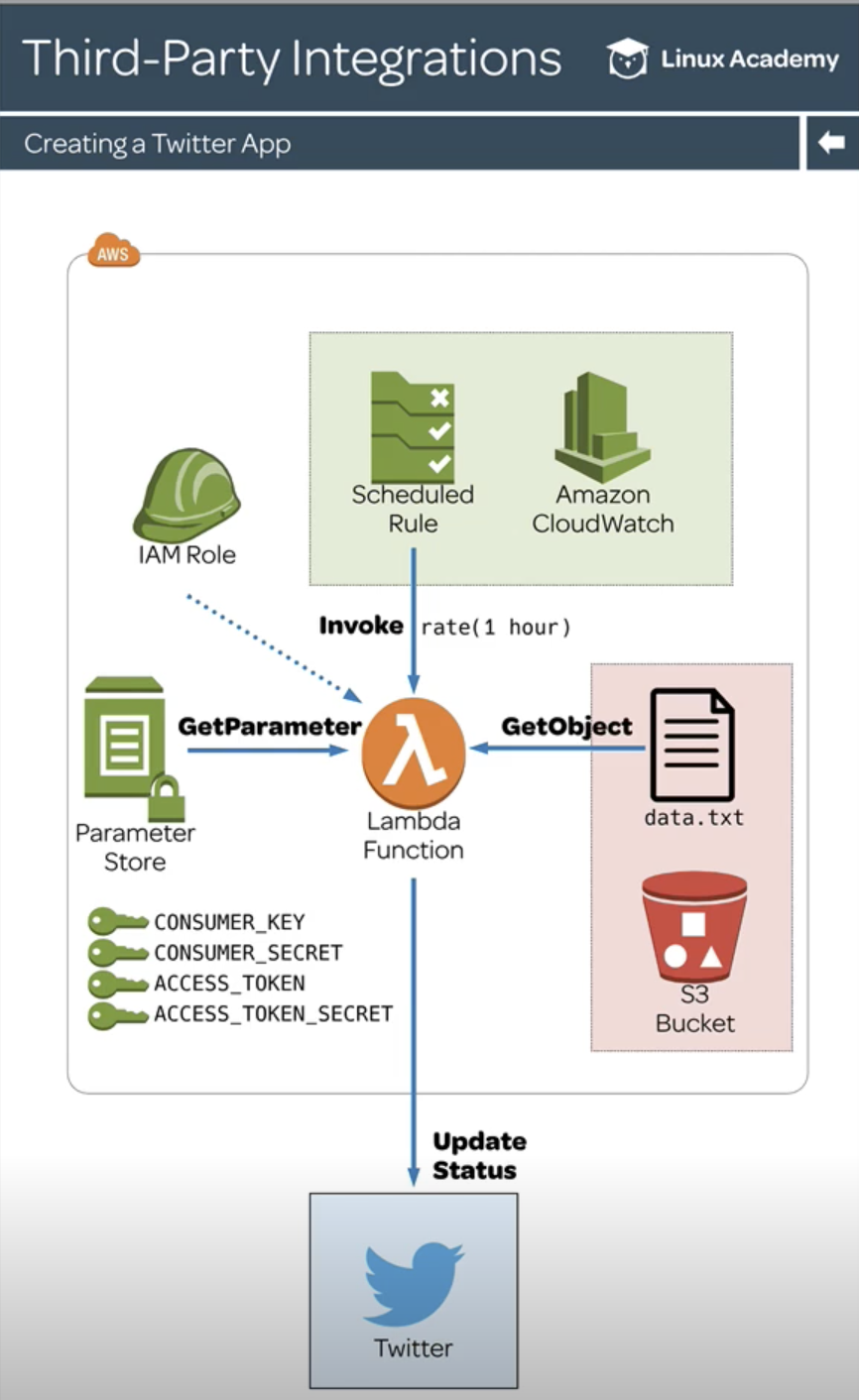

```python
import os
import random

import boto3
import tweepy
from botocore.exceptions import ClientError

BUCKET_NAME = os.environ['BUCKET_NAME']
KEY = 'data.txt'

s3 = boto3.resource('s3')
ssm = boto3.client('ssm')


def get_parameter(param_name):
    # Read a (possibly encrypted) value from SSM Parameter Store.
    response = ssm.get_parameter(Name=param_name, WithDecryption=True)
    credentials = response['Parameter']['Value']
    return credentials


def get_tweet_text():
    # /tmp is the only writable path in Lambda.
    filename = '/tmp/' + KEY
    try:
        s3.Bucket(BUCKET_NAME).download_file(KEY, filename)
    except ClientError as e:
        if e.response['Error']['Code'] == "404":
            print(f'The object {KEY} does not exist in bucket {BUCKET_NAME}.')
        # Without the file there is nothing to tweet.
        raise

    # Pick one random line of the file as the tweet.
    with open(filename) as f:
        lines = f.readlines()
        return random.choice(lines)


def lambda_handler(event, context):
    CONSUMER_KEY = get_parameter('/TwitterBot/consumer_key')
    CONSUMER_SECRET = get_parameter('/TwitterBot/consumer_secret')
    ACCESS_TOKEN = get_parameter('/TwitterBot/access_token')
    ACCESS_TOKEN_SECRET = get_parameter('/TwitterBot/access_token_secret')

    auth = tweepy.OAuthHandler(CONSUMER_KEY, CONSUMER_SECRET)
    auth.set_access_token(ACCESS_TOKEN, ACCESS_TOKEN_SECRET)
    api = tweepy.API(auth)

    tweet = get_tweet_text()
    print(tweet)
    api.update_status(tweet)
```
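`random.choice(lines)` can return a blank line, and every line read with `readlines()` keeps its trailing newline. A sketch of a stricter picker; `pick_tweet` is hypothetical, and the injectable `rng` exists only to make it testable.

```python
import random


def pick_tweet(lines, rng=random):
    """Pick a random non-blank line, stripped of surrounding whitespace."""
    candidates = [line.strip() for line in lines if line.strip()]
    if not candidates:
        raise ValueError("no tweetable lines")
    return rng.choice(candidates)


lines = ["First tweet\n", "\n", "Second tweet\n"]
print(pick_tweet(lines, rng=random.Random(42)))
```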